For those wondering why some artists were actually pro-NFT: it was because of this concept. Tie the art to the artist with an online database where anyone can look it up.

But also: this is worthless, because the credentials are tied to the image itself. So either remove the metadata (which I would expect for privacy reasons) or run it through a very simple filter/quality downgrade to garble the hidden pixels.

The ACTUAL solution to this is indeed the online database. But the artist registers the image to themselves, and then Google Images or whatever does image recognition (similar to how you can get a DMCA strike for singing a few words of a song) to match it against the database. Lower the quality and it still matches. And if they find your rendition of Kim Possible’s feet in an AI image, it can potentially give you some of the revenue from that.
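To make the "lower the quality and it still matches" part concrete, here is a rough sketch of a perceptual hash (the simple "average hash" variant). This is not what Google or anyone else actually runs; the thumbnails, the `registry` dict, the `degrade` step and the `max_distance` threshold are all made up for illustration.

```python
def average_hash(pixels):
    """Return a list of bits: 1 where a pixel is brighter than the image mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return [1 if p > mean else 0 for p in flat]


def hamming(a, b):
    """Count the positions where two equal-length bit lists differ."""
    return sum(x != y for x, y in zip(a, b))


def degrade(pixels, step=32):
    """Crude stand-in for a quality downgrade: quantize brightness levels."""
    return [[(p // step) * step for p in row] for row in pixels]


def matches(hash_a, hash_b, max_distance=5):
    """Treat two hashes as the same image if only a few bits differ."""
    return hamming(hash_a, hash_b) <= max_distance


# Hypothetical 8x8 grayscale thumbnails (values 0-255).
registered = [[16 * (x + y) for x in range(8)] for y in range(8)]
unrelated = [[255 if (x + y) % 2 else 0 for x in range(8)] for y in range(8)]

registry = {"artist_a": average_hash(registered)}  # hypothetical database

reupload = average_hash(degrade(registered))
print(matches(registry["artist_a"], reupload))                 # degraded copy
print(matches(registry["artist_a"], average_hash(unrelated)))  # different image
```

The point is that the hash lives in the database, not in the file, so stripping metadata or re-encoding the image doesn't remove anything the matcher depends on.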

But that wouldn’t require new proprietary hardware.

Why not go with some kind of certificate chain instead?

Here’s the image… signed… here’s who signed it.

Is it for edit/changes?

Here’s an image that was edited based on an earlier image. Here’s who signed that… and its base image’s hash, which can then be looked up if someone decides to see what those images were?
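Something like this, as a very rough sketch. Big assumption: I'm using an HMAC with a shared key as a stand-in for the signature so it runs on the standard library alone; a real chain would use public-key certificates. The record layout and the names `make_record` and `verify_record` are invented for the example.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def sign(key: bytes, message: str) -> str:
    # Stand-in for a real signature. HMAC needs a shared secret;
    # an actual certificate chain would use asymmetric keys.
    return hmac.new(key, message.encode(), hashlib.sha256).hexdigest()


def make_record(image: bytes, signer: str, key: bytes, parent_hash=None) -> dict:
    """Sign the image hash, the signer name and (for edits) the base image's hash."""
    image_hash = sha256_hex(image)
    payload = f"{image_hash}|{signer}|{parent_hash or ''}"
    return {
        "image_hash": image_hash,
        "signer": signer,
        "parent_hash": parent_hash,
        "signature": sign(key, payload),
    }


def verify_record(image: bytes, record: dict, key: bytes) -> bool:
    """Recompute the payload from the image bytes and check the signature."""
    payload = f"{sha256_hex(image)}|{record['signer']}|{record['parent_hash'] or ''}"
    return hmac.compare_digest(sign(key, payload), record["signature"])


# Hypothetical keys and image bytes.
photographer_key = b"photographer-secret"
editor_key = b"editor-secret"
original = b"raw image bytes"
edited = b"raw image bytes, cropped"

original_record = make_record(original, "photographer", photographer_key)
edit_record = make_record(edited, "editor", editor_key,
                          parent_hash=original_record["image_hash"])

print(verify_record(edited, edit_record, editor_key))         # untouched edit
print(verify_record(edited + b"!", edit_record, editor_key))  # altered after signing
```

The `parent_hash` field is the "base image's hash" bit: anyone holding the edit's record can look up the earlier image by that hash and check its record too.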

That is the metadata solution tied to the image itself. It doesn’t work because all I have to do is strip the metadata. This is why there is almost a ritualistic worship of certs in software development and internet traffic.

The key is that you need the validation to be decoupled from the image. Computer vision is pretty much perfect for this and is why I specifically referenced how DMCA violations are detected now. Google and Amazon do the scan, not the end user.

I think that’s not the problem that this technology is intended to solve.

It’s not a “Is this picture copied from someone else?” technology. It’s a “Did a human take this picture, and did anyone modify it?” technology.

E.g.: Photographer Bob takes a picture of Famous Fiona driving her Camaro and posts it online with this metadata. Attacker Andy uses photo editing tools to make it look like Fiona just ran over a child. Maybe his skills are so good that the edits are undetectable.

Andy has two choices: Strip the metadata, or keep it.

If Andy keeps the metadata, anyone looking at his image can see that it was originally taken by Bob, and that Fiona never ran over a child.

If Andy strips the metadata (and if this technology is widely accessible and accepted by social media, news sites, and everyday people) then anyone looking at the image can say “You can’t prove this image was actually taken. Without further evidence I must assume that it’s faked”.
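Andy's two choices boil down to a three-way check on the viewer's side. A minimal sketch, assuming the signed credential has already been validated and reduced to the hash of the image it vouches for (`claimed_hash`, `Verdict` and `assess` are names I made up):

```python
import hashlib
from enum import Enum
from typing import Optional


class Verdict(Enum):
    VERIFIED = "matches the signed original"
    TAMPERED = "credential present, but the pixels were changed"
    UNVERIFIED = "no credential, assume it could be fake"


def assess(image: bytes, claimed_hash: Optional[str]) -> Verdict:
    """claimed_hash is the image hash from an already-validated credential, or None if stripped."""
    if claimed_hash is None:
        return Verdict.UNVERIFIED
    if hashlib.sha256(image).hexdigest() == claimed_hash:
        return Verdict.VERIFIED
    return Verdict.TAMPERED


# Hypothetical image bytes standing in for Bob's photo and Andy's edit.
bobs_photo = b"Fiona driving her Camaro"
andys_edit = b"Fiona driving her Camaro, doctored"
credential = hashlib.sha256(bobs_photo).hexdigest()

print(assess(bobs_photo, credential))  # Bob's original, metadata intact
print(assess(andys_edit, credential))  # Andy keeps the metadata
print(assess(andys_edit, None))        # Andy strips the metadata
```

Neither branch gets Andy a "verified" result, which is the whole argument.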

I think spinning this as a tool to fight AI is just clickbait because AI is hot in the news. It’s about provenance and limiting misinformation.

Which does not solve that at all.

Because the vast majority of “paparazzi” and controversy pictures aren’t taken by Jake Gyllenhaal. They are taken by randos on the street with phones who then sell their picture to TMZ or whatever.

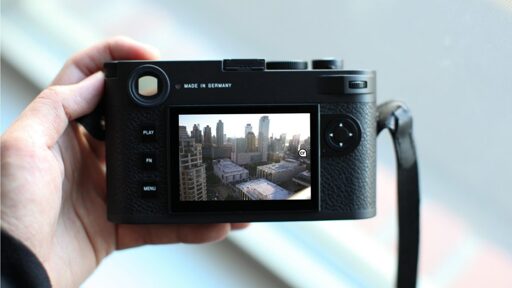

And they aren’t going to be paying for an expensive Leica camera. And Samsung and Apple aren’t going to be licensing that tech.